Abstract

With respect to model parameterization and sensitivity analysis, this work uses a practical example to suggest that methods that start with simple models and use computationally frugal model analysis methods remain valuable in any toolbox of model development methods. In this work, groundwater model calibration starts with a simple parameterization that evolves into a moderately complex model. The model is developed for a water management study of the Tivoli-Guidonia basin (Rome, Italy) where surface mining has been conducted in conjunction with substantial dewatering. The approach to model development used in this work employs repeated analysis using sensitivity and inverse methods, including use of a new observation-stacked parameter importance graph. The methods are highly parallelizable and require few model runs, which make the repeated analyses and attendant insights possible. The success of a model development design can be measured by insights attained and demonstrated model accuracy relevant to predictions. Example insights were obtained: (1) A long-held belief that, except for a few distinct fractures, the travertine is homogeneous was found to be inadequate, and (2) The dewatering pumping rate is more critical to model accuracy than expected. The latter insight motivated additional data collection and improved pumpage estimates. Validation tests using three other recharge and pumpage conditions suggest good accuracy for the predictions considered. The model was used to evaluate management scenarios and showed that similar dewatering results could be achieved using 20 % less pumped water, but would require installing newly positioned wells and cooperation between mine owners.

Résumé

En ce qui concerne le paramétrage des modèles et l’analyse de sensibilité, ce travail utilise un exemple pratique pour suggérer que les méthodes qui débutent avec des modèles simples et utilisent des méthodes d’analyses de modèle économe en calcul restent précieuses dans toute boîte à outils de méthodes de développement de modèles. Dans ce travail, l’étalonnage du modèle d’écoulement d’eaux souterraines commence par un paramétrage simple qui évolue vers un modèle de complexité moyenne. Le modèle est développé pour une étude de gestion des ressources en eau du bassin de Tivoli-Guidonia (Rome, Italie) où l’exploitation du sous-sol a conduit à un dénoyage important. L’approche pour le développement du modèle utilisée dans ce travail emploie des analyses répétées à l’aide de méthodes inverses et de sensibilité, y compris l’utilisation d’un nouveau graphique de l’importance des paramètres d’observation cumulée. Les méthodes sont fortement parallélisables et nécessitent peu d’exécution des modèles, ce qui rend possible des analyses répétées et des aperçus spécifiques. Le succès d’une conception de l’élaboration d’un modèle peut être mesuré par des aperçus des résultats et de la pertinence de la précision du modèle par rapport aux prévisions. Exemples d’informations obtenues : (1) Une croyance de longue date que, à l’exception de quelques fractures distinctes, le travertin est homogène, a été jugée comme inadéquate, et (2) le débit de pompage de dénoyage est plus critique que la précision du modèle, par rapport à ce qui était attendu. Cette dernière information a motivé la collecte de données supplémentaires et l’amélioration des estimations des pompages. Les essais de validation utilisant trois autres conditions de recharge et de pompage suggèrent une bonne précision pour les prévisions considérées. Le modèle a été utilisé pour évaluer des scenarios de gestion et a montré que des résultats similaires de dénoyage pourraient être obtenus en utilisant 20 % de moins d’eau pompée, mais nécessiterait l’installation de nouveaux puits et la coopération entre les propriétaires exploitant les ressources minérales du sous-sol.

Resumen

Con respecto a la parametrización del modelo y análisis de sensibilidad, este trabajo utiliza un ejemplo práctico para sugerir que los métodos que comienzan con modelos simples y utilizan métodos de análisis de modelos computacionalmente frugales siguen siendo valiosos en cualquier caja de herramientas de métodos de desarrollo para modelación. En este trabajo, la calibración del modelo del agua subterránea se inicia con una parametrización simple que evoluciona en un modelo de moderada complejidad. El modelo se desarrolla para un estudio sobre la gestión del agua de la cuenca del Tivoli-Guidonia (Roma, Italia), donde la minería de superficie se ha llevado a cabo en conjunción con una eliminación sustancial de agua. El enfoque de desarrollo del modelo utilizado en este trabajo emplea el análisis de sensibilidad y métodos inversos, incluso el uso de un gráfico de importancia de un nuevo parámetro de observación acumulado. Los métodos son altamente paralelizables y requieren unas pocas corridas del modelo, lo que hace posibles análisis repetidos y las interpretaciones. El éxito del diseño del desarrollo del modelo puede ser medido por las observaciones obtenidas y la exactitud demostrada por el modelo en relación a las predicciones. Se obtuvieron observaciones por ejemplo: (1) En relación con una creencia largamente sostenida de que, a excepción de una pocas fracturas claras, el travertino es homogéneo, se encontró que puede ser inadecuada, y (2) el ritmo de bombeo de la extracción de agua es más crítico para la precisión del modelo que lo esperado. Esta última explicación motivó una recolección adicional de datos y mejorá las estimaciones de volumen bombeado. Las pruebas de validación utilizando otras tres condiciones de recarga y bombeo sugieren una buena exactitud para las predicciones consideradas. El modelo se utilizó para evaluar los escenarios de gestión y mostró que los resultados de la eliminación del agua podrían alcanzarse usando 20 % menos de agua bombeada, pero requeriría instalar nuevos sitios para pozos y la cooperación entre los propietarios de minas.

摘要

针对模型参数化和灵敏度分析,本项研究利用实例提出从简单模型入手、采用计算简便的模型分析方法在任何模型开发方法中依然有价值。在本研究中,地下水模型校正从简单的参数化入手,然后进展到中等复杂的模型中。(意大利罗马市)Tivoli-Guidonia盆地露天开采,伴随着大量的排水,为当地的水管理研究开发了模型。本研究中的模型开发方法依靠灵敏度和反演法包括采用了新观测数据构成的参数重要性曲线进行重复分析。方法高度平行,需要很少运行模型,就可以进行重复分析并伴随得到认识结果。模型开发设计的成功可以通过获得的认识结果及展示的与预测相关的模型精确度来衡量。实例获取的认识结果有:1)除了几个明显的特点,石灰华是均质的这一长期持有的观念是不充分的,2)排水抽水速度对模型精确度来说比预想的更重要。后者的认识促使收集额外的资料,提高抽水量估算值精度。采用三个其他补给和抽水条件进行的校正试验对预测具有很好的精确度。模型用于评估各种管理方案,模型还表明,采用少于20%的抽水量可以获取类似的排水结果,但这需要打新定位的井及矿主之间的合作。

Resumo

Com respeito a parametrização de modelo e análise de sensibilidade, esse trabalho usa um exemplo prático para sugerir que métodos que se iniciam com modelos simples e usam métodos de análise do modelo computacionalmente frugal continuam a ser valiosos em qualquer caixa de ferramentas de métodos de desenvolvimento do modelo. Neste trabalho, calibração do modelo de águas subterrâneas começa com uma parametrização simples que evolui para um modelo de complexidade moderada. O modelo é desenvolvido para um estudo de gestão dos recursos hídricos da bacia do Tivoli-Guidonia (Roma, Itália) onde a mineração de superfície tem sido conduzida em conjunto com uma drenagem substancial. A abordagem para desenvolvimento do modelo utilizada nesse trabalho aplica análises repetidas utilizando análise de sensibilidade e métodos de inversão, incluindo o uso de um novo gráfico de importância das observações empilhadas. Os métodos são altamente paralelizável e exigem algumas realizações de modelo, que fazem as análises repetidas e compreensão de atendimento possíveis. O sucesso de um esquema de desenvolvimento de modelo pode ser medido por percepções alcançadas e demonstrou a precisão do modelo referente às previsões. Foram obtidos entendimentos, como por exemplo: (1) A antiga crença de que, com exceção de algumas fraturas distintas, o travertino é homogêneo foi considerada inadequada, e (2) A taxa de bombeamento de rebaixamento do lençol é mais crítica para modelar precisão do que o esperado. Este último entendimento motivou a coleta de dados adicionais e melhores estimativas de bombeamento. Testes de validação com três outras condições de recarga e de bombeamento sugerem boa precisão para as previsões consideradas. O modelo foi utilizado para avaliar cenários de gestão e que mostrou que resultados similares de drenagem podem ser alcançados utilizando 20 % menos água bombeada, mas pode requerer a instalação de novos poços posicionados e cooperação entre os donos de minas.

Similar content being viewed by others

Introduction

Geologic and hydrologic data and knowledge have generally been unable to characterize groundwater systems fully enough to produce models useful for goals such as resource management without model calibration. That is, models produced using only field data and knowledge about model inputs can be shown to be inadequate, even in highly controlled and sampled circumstances (e.g., Barth et al. 2001; Barlebo et al. 2004; Liu et al. 2010; Clement 2011; Gupta et al. 2012). Thus, models tend to be calibrated such that better reproduction of measured responses is achieved, with the hope that predictive ability will be improved.

The model calibration steps of concern in this work are parameterization, sensitivity analysis, and inverse modeling. Two recent discussions about how to increase the likelihood of attaining accurate models have focused on

-

1.

How to use the continuum of simply to highly parameterized groundwater models

-

2.

How to use the continuum of sensitivity analysis and inverse modeling methods that range from computationally frugal (often take 10’s of model runs), which require assumptions about model smoothness and Gaussian errors, to computationally demanding (often 1,000 to 10,000 models runs or more) and few required assumptions

For the parameterization discussion, the basic principle is shown in Fig. 1. Parameters are used to define spatially and temporally distributed system properties, boundary conditions, stresses, and possibly other aspects of the system. Defining parameters provides an interface between the computer model, which may have a huge number of individual values that need to be defined, and the conceptual model that represents how the system is understood. Parameter definition is generally implemented using lengths, areas, or volumes of constant value (zones) able to represent features that vary abruptly (such as some geologic facies), simple interpolations able to represent specific features that vary gradually (such as deltaic deposits), and complex compilations of discrete or interpolated values often based on indicator or traditional kriging. The parameters define “parameter space”, with dimensions equal to the number of parameters. For three parameters, parameter space can be envisioned as a three-dimensional (3D) Cartesian coordinate system. When there are more parameters, a hyperspace is created. In Fig. 1 “structure dominated” models generally have few parameters that are defined based on geologic and hydrologic knowledge or hypotheses (e.g., Gingerich and Voss 2005; Hill 2006; Foglia et al. 2013). “Observation dominated” models generally are highly parameterized and the number of model parameters used to represent the system can be greater (often much greater) than the number of observations (e.g., Hunt et al. 2007; Hill 2008; Doherty and Welter 2010; Dausman et al. 2010; Doherty and Christensen 2011). In groundwater models, most often the hydraulic conductivity field is highly parameterized. There are many additional discussions of parameterization in the literature. Of note are Refsgaard (1997), de Marsily et al. (2005), Carrera et al. (2005), Kirchner (2006), Clark et al. (2008), Hastie et al. (2009), Refsgaard et al. 2012, Hill et al. (2015), and Best et al. (2015). Models with any number of parameters can have insensitive and/or interdependent parameters, and thus be ill-posed. To attain a tractable inverse problem, some combination of defined-value or smoothing regularization, or setting of process-model or SVD (singular value decomposition) parameters are used (Tonkin and Doherty 2009; Hill and Tiedeman 2007; Hill and Nolan 2011; Aster et al. 2013; Poeter et al. 2014). Definition of parameterization type based on the number of parameters is useful, but can be misleading. The interested reader is encouraged to consider, for example, Fienen et al. (2009) and Hill (2010).

Generally expected tradeoff between model fit to observations and prediction accuracy for models dominated by structural information (at the extreme, using very few model parameters) and observations (at the extreme, using thousands of model parameters). For groundwater models, structure is often defined using geologic data and processes. (modified from Hill and Tiedeman 2007, p. 269 and references cited therein)

For the second discussion, computationally frugal sensitivity analysis is dominated by what are called local methods and inversion is generally conducted with a Levenberg-Marquardt method (e.g., Anderman et al. 1996; Finsterle and Preuss 1995; Poeter and Hill 1997; Hill and Tiedeman 2007; Foglia et al. 2009). Strictly local methods provide an analysis for one set of parameters values, and of concern is whether solution discontinuities, also called “numerical daemons”, produce erratic results, and how to account for results that vary over parameter space, as expected when models are nonlinear with respect to the parameters (e.g., Beven 2009; Saltelli et al. 2008; Kavetski and Clark 2010; Rakovec et al. 2014; Razavi and Gupta 2015). This work reports results of strictly local methods, and tests for erratic and variable results over the course of model development. Finally, some of the sensitivity analysis methods used in this work have been criticized by proponents of alternative local methods (Doherty and Hunt 2010; Finsterle 2015); and some of these concerns are discussed in the methods sections. For the Levenberg-Marquardt inverse method, of concern is that as a gradient-based method it can become trapped by local minima. In this work, tests for local minima are conducted by starting the inversion from alternative starting sets of parameter values.

Computationally demanding methods are usually classified as global (e.g., Borgonovo 2006, 2007; Saltelli et al. 2008; Kucherenko et al. 2009, 2012; Keating et al. 2010; Laloy and Vrugt 2012). They often require 1,000 to 10,000 s of model runs, and even more, but two global sensitivity-analysis methods can be conducted with few model runs: the method of Morris and elementary effects (Morris 1991; Saltelli et al. 2008). Saltelli and Funtowicz (2014) suggest using only global methods for sensitivity analysis, and only at the end of a study to identify sources of uncertainty. A problem with global methods is that, as commonly used, they produce results that are averages for all or large parts of parameter space. Though such results are stable numerically, such averaging can obscure important relations between model processes or characteristics and simulated results (Rakovec et al. 2014). To ensure that important relations are not obscured, to make it convenient to obtain evaluations routinely throughout model development, and because evaluation of results from local methods here and in other studies (Foglia et al. 2007; Lu et al. 2012, 2014) indicated good performance, global methods are not used in this work.

Advances in difficult problems such as how to best pursue model development and achieve more transparent, falsifiable (or at least well-tested) models for complex systems (Sokal 2009) often come through examples like that presented in this work (Oreskes 2000). For example, Doherty and Christensen (2011) use a synthetic highly parameterized groundwater problem and demonstrate that accurately predicting quantities that differ significantly from observed values is very difficult. Foglia et al. (2013) use a simply parameterized field problem and found that models with the most erroneous predictions were associated either with very poor fit to observations (as measured by the sum of squared weighted residuals) or with poorly posed inverse problems characterized by trying to estimate parameters with very small sensitivity measures and high interdependence, both of which are measured in this work. Better predictions were obtained using more simply parameterized models. Recently, more philosophical papers have also addressed how to conduct modeling of environmental systems. The goal of model pedigree and the problem of whether models of complex, interacting processes can be trusted is discussed by Funtowicz and Ravetz (1990) and Saltelli and Funtowicz (2014, 2015). In this work, these two issues are, in part, addressed by using a modularly constructed, open-source, public domain, commonly used program (in this case MODFLOW, Harbaugh 2005). In addition, the clear description of the geology and its full documentation in reports, as achieved in the study of the Tivoli-Guidonia basin, are critical to addressing these concerns.

The model used in this work is developed for a water management study of the Tivoli-Guidonia basin (Rome, Italy), which is characterized by two aquifers, one partially confined in a limestone bedrock and one in a surficial travertine plate. The system has been surface-mined for centuries, most recently in conjunction with substantial dewatering. Model calibration is conducted starting from a simple model (from the left in Fig. 1) using computationally frugal sensitivity-analysis and inverse methods. The calibration process produces a back and forth exchange of information between a hydrogeologic conceptual model and the head and flow data, so that the conceptual model is updated based on the quantitative analysis provided by the model. The accuracy of the Tivoli-Guidonia basin model is tested (often called validated) using data sets from three alternative hydrologic conditions. The model is then used to evaluate management alternatives for dewatering the travertine mines.

Geological and hydrogeological setting of the field study

The flat plain of the Tivoli-Guidonia basin (known also as Acque Albule Plain) is located close to Rome, Italy, near the foothills of the central Apennine Chain. It is largely covered by travertine deposits (Fig. 2). The Romans quarried the travertine and used it on the facades of the main imperial age Roman buildings. The study domain is bounded by the Lucretili Mountains to the east, the Cornicolani Mountains to the north, the Colli Albani Volcano to the south and low hills to the west (Fig. 2). Land elevation of the area ranges from 80 masl (meters above sea level) in the northeast to 45 masl in the southwest. The main watercourse of the basin is the Aniene River, which flows into the Tiber River in Rome just a few kilometers downstream (La Vigna et al. 2010, 2016a, b). General geologic information about the Acque Albule Plain is presented in previous and recent studies of surface geology and stratigraphy (Maxia 1949, 1950; Manfredini 1949; Faccenna et al. 1994, 2008) and geological maps (Giordano et al. 2009).

The Tivoli-Guidonia plain model area (outlined in red) with simplified surficial geology and drainage network to show the regional setting of the basin. The black line locates the conceptual model section shown in Fig. 3. Autostrada Spring (PIL), Alberocaduto Spring (ALB) \and Barco Springs B1 and B2. P1–P7 refer to the monitored probes. Observations EL, MG, and SG are shown in Fig. 3

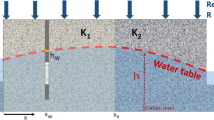

The travertine deposit is up to 80 m thick and overlies either (1) previous volcanic products mostly on the margins of the study area or (2) Pliocene sediments characterized by marine clay at the bottom and earlier sand and gravel of continental environment. The Pliocene sediments overlie Mesozoic carbonates, outcropping along the north and east of the modeled area in the Cornicolani and Lucretili mountains, and lowered by important faults in the center of the plain (Fig. 2).

Hydrothermal activity still occurs, as indicated by upwelling of gas and mineralized water at 23 °C temperature (versus average ambient values of about 15–16 °C) and electric conductivity of about 3,000 μS/cm (ambient values are 500–700 μS/cm). The mineralized water upwells in the Regina and Colonnelle lakes (known as Acque Albule Springs; Fig. 2) and in several minor springs in the plain (Autostrada Spring, Alberocaduto Spring and Barco Springs, respectively labeled as PIL, ALB, B1 and B2 in Fig. 3). Flows of up to several m3 per second occur at these springs. The hydrothermal groundwater mixes in the phreatic aquifer with the superficial cool water and flows out of main hydrothermal springs and open fractures into the travertine quarries (Petitta et al. 2010; Carucci et al. 2011). Another important discharge point in this system is the Acquoria Spring (ACQ in Fig. 3) related to the karst system and located to the east of the plain, with an outflow of about 103 L/s.

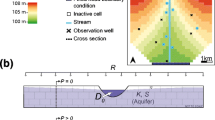

The Tivoli-Guidonia groundwater model structure. A reduced conceptual model proposed by La Vigna et al. (2012a) that combines units shown in Fig. 2 is used to discretize the geology. Division of the geology into the three model layers and the location of the observations used in regression are shown. EL and MG are about the Carbonate aquifer, layer 3, and refer to bedrock heads. MODFLOW packages used to define the model boundary conditions are listed for every layer (layer 2 does not have any specific associated package)

Recharge occurs in the Cornicolani (north of the modeled area) and Lucretili (east) mountains. Rainfall infiltrates into the fractured and karst system to the saturated zone of the carbonates. Direct-rainfall and horizontal flow from the outcropping carbonates along the northern and eastern sides to the surficial travertine aquifer defines a minor contribution. The “Pozzo del Merro” karst lake (Cornicolani Mountains; Fig. 2), one of the deepest karst caves in the world, has an average head of 80 masl (Baiocchi et al. 2010) and is used to provide a reference head for the whole Mesozoic carbonate system. The 80–40-masl land elevation levels in the investigated domain produce artesian conditions in the confined bedrock system beneath the travertine.

Large variations in recharge to the deep carbonate system over time due to dry seasons or climate change influence the saturation of the superficial system, demonstrating a direct connection between the two aquifers (Capelli et al. 1987; La Vigna 2009, 2011; La Vigna et al. 2012a, b) and that upwelling groundwater constitutes the predominant recharge of the surficial system.

Hydrogeologic conceptual models for the system based on the aforementioned geological knowledge were developed by Capelli et al. (1987), La Vigna (2009, 2011) and La Vigna et al. (2012a). In this work, conceptual models are tested quantitatively by using them to construct quantitative MODFLOW models. The ability of a quantitative model to reproduce measured heads and flows with reasonable parameter values indicates support for the conceptual model. Difficulties can be used to discredit and possibly improve the conceptual model.

The most recent conceptual model of the system is described by La Vigna et al. (2012a) and defines three main hydrostratigraphic units. The bottom unit consists of karstified limestone bedrock (the Mesozoic Carbonates). The middle unit consists of the marine and continental Pliocene deposits. The top unit is defined by the travertine, a fissured/fractured and locally karstified aquifer, with locally Holocene alluvia and volcanic products at the top. Thus, the groundwater system is conceptualized as being composed of two fissured/fractured and karst aquifers interbedded and hydraulically divided by a thick low-permeability Pliocene confining unit allowing upward mineralized water flows along fault zones. The flow system is dominated by regional upwelling of warm mineralized water to the phreatic top aquifer. This water enters the system as recharge to the basal carbonates in outcrop areas.

The large quantity of water flowing up through the fractures has to be removed from the quarries to enable travertine excavation. The Roman quarriers encountered the water table a few meters below ground level, which limited their mining. Since World War II, progressively larger submersible pumps have allowed substantial dewatering and additional mining of the travertine. In addition, local hydrothermal water flows from the carbonate are increasingly used to supply public pools. Thus, the groundwater system has been exploited extensively. Rome has expanded so that civil buildings, commercial activities, farms, and thermal centers occupy the area. Dewatering in the mined area is causing conflicts among water users. The main aim of the model described in this work was to explore possible ways to improve water resource management by reducing dewatering.

Methods

This section first describes the existing hydrologic data and how observations and related weighting used to judge model performance were obtained. Next, methods for groundwater model development are presented. Finally, the model analysis methods used to identify important and unimportant parameters and observations and to calibrate the model are described.

Hydrologic data, observations, error analysis, and weighting

Thirteen heads and five flow observations are used in model calibration. The observations are chosen from a larger set of data obtained during a detailed hydrogeologic survey in February 2008 (La Vigna 2009; La Vigna et al. 2012a). The survey consisted of 82 head and water-quality measurement points. Seven of these points were part of a monitoring network of the travertine aquifer (locations are shown in Figs. 2 and 3) recording hourly water levels since 2006 (La Vigna et al. 2007). Most survey points investigated the surficial aquifer. Three investigated the bedrock: two in the northern border of the plain (these and all travertine aquifer survey points which were not selected as observations are not shown in Fig. 2, while all points selected as observation are shown in Fig. 3), and one is the Pozzo del Merro Lake in the Cornicolani Mounts (black and orange triangle in Fig. 2). Measured heads show a piezometric pattern with a large cone of depression in the quarry area and relatively high levels at the main hydrothermal Regina and Colonnelle lakes. Head measurements obtained using water-level dippers can be affected by elevation accuracy of the survey point, experience of the surveyor, instrument precision, and dynamics of the water table.

In the study area, dewatering activities produce large spatial and temporal variations in the water-table elevation. La Vigna et al. (2012a) showed how the spatial and temporal variability of groundwater levels of the Acque Albule Plain change over time. To evaluate temporal variability in measured heads, data from seven probes monitored hourly (P1–P7 in Fig. 2) were used to calculate monthly standard deviations. These mostly ranged from 0.1 to 0.5 m with a maximum of 0.9 m in January 2010. The values from the individual probes are then averaged. The survey period (February 2008) serendipitously occurred in a low variability time (standard deviation = 0.1), so the errors resulting from water-table fluctuations are small. Inspection of the long-term groundwater level hydrographs suggests the average heads in this month-long period were consistent with long-term average pumping conditions. As such, steady state provides a useful approximation because the dewatering has been, in a time-averaged sense, steady over a long period of time relative to how long the system takes to reach equilibrium. The predictions involve potential long-term changes to these pumping rates, so predictive runs also are well served by steady-state simulations. Thus, based on an analysis of the data and the purpose of the model, a steady-state model was used for model development and prediction.

Of the 82 wells for which head data existed, 13 were measured more than once during February 2008 and heads at these locations were used to produce observations for model calibration. At these wells the number of measurements from February 2008 range between 4 (in six wells manually measured) and 672 (in the seven monitoring probes).The average heads ranged from 60 to 39 masl at these wells, and the temporal averages were used as observations in the regression. The standard deviation related to each average ranged from 0.1 to 0.5 m. The other 69 head data were not used as observations because the temporal variability could not be mitigated through averaging; however, informal comparison to simulated results were conducted and suggested basic consistency.

Discharge at five springs (locations are show in Fig. 3 and they are listed in Table 1) was measured using the velocity area method as described in the International Standards (2007). Channel flow discharge was measured to determine the total amount of quarry dewatering, which equaled about 350,000 m3/day. Discharge flow measures performed by the velocity area method can have standard deviations equal to about 20–25 % of the flow for large rivers (Di Baldassarre and Montanari 2009), while for little streams the uncertainty percentage is as small as 5–10 % (Carter and Anderson 1964). The measured flows may reflect some mixing with phreatic water, while the simulated flows all come from depth. This tends to create a bias, with measured flows being larger; however, the magnitude of the phreatic flow is thought to constitute a small percentage and no bias correction is applied. Flow measurement uncertainties of 20 % are used to define the observation weights.

Direct aerial recharge data to the system and distributed (diffuse) withdrawals from many small wells were obtained from the work of Capelli et al. (2005), and are discussed in the following under section “Groundwater model development”. They calculated an average recharge rate of 3,600 m3/s considering the entire plain and recharge area of the Cornicolani and Lucretili Mountains, and an outflow rate due to diffuse withdrawals of about 0.7 m3/s.

This work uses error-based weighting of head and flow observations (e.g., Hill and Tiedeman 2007; Foglia et al. 2009; Renard et al. 2011; Hill et al. 2013, 2015). Observation weighting can be pursued using a variety of perspectives (e.g., Lu et al. 2013; Anderson et al. 2015). Error-based weighting is the only method that can be shown theoretically to produce minimum variance parameter estimates (Hill and Tiedeman 2007, their Appendix C). Having a philosophy such as error-based weighting provides a framework to define weights and place exceptions in perspective. Indeed, trying exceptions is a mechanism for testing alternative conceptual error models. In this work, weights are defined using the evaluation of errors described in this section of the report and the weights were kept at these values throughout model development. The statistics are summarized in the caption of Table 2.

Groundwater model development

The investigated domain was simulated using MODFLOW-2005 (Harbaugh 2005) in steady state. The model was initially built according to the La Vigna et al. (2012a) conceptual model, which is based on surficial geology, well logs, subsurface features revealed through mining, and hydrologic information such as spring locations.

The active domain covers the plain area (55 km2; see Fig. 3). Model cells are 100 m on a side, and the grid has 90 rows and 83 columns. Following the previously presented conceptual model, the system was simulated using three model layers defined using the Layer Property Flow (LPF) Package (Fig. 3). Model layers mostly correspond to hydrostratigraphic units. Where these outcrop (as for carbonates and Pliocene marine clay), the same hydraulic properties were assigned to the corresponding cells of upper layers.

Layer one, bounded at the top by the topographic surface, represents the travertine plate in the center, and recent alluvial sediments around the edges. The limestone bedrock outcrops in the north and east sides, and the Pliocene deposit outcrops in the west side of the area. Most of layer two is bounded at the top by the contact of the Pliocene unit below and the travertine deposit above. Layer three is composed of limestone bedrock. Hydrogeologic unit top and bottom surfaces were defined by means of interpolation methods using more than 100 stratigraphic logs and geophysical data (vertical electrical soundings; La Vigna et al. 2012a).

The jointed/fractured travertine of layer one and the limestone bedrock are dominated by secondary porosity such as fractures. Numerous small fissures are expected to occur within nearly all cells, and justifies use of the equivalent porous media approach in MODFLOW (La Vigna et al. 2009b; Wellman et al. 2009; Anderson et al. 2015). Large fractures are important to the associated spring flows, and are represented explicitly. From this beginning, the structure and hydraulic properties were calibrated based on hydrologic field data. Alternative fracture locations (La Vigna et al. 2013a) were used to evaluate their impact on groundwater flow.

Few local well tests were available to derive hydraulic conductivity values. To begin, hydraulic conductivity values were set equal to the middle of general hydraulic conductivity ranges for similar medium reported by Spitz and Moreno (1996) and listed in Fig. 4. The literature ranges tend to extend 2–3 orders of magnitude above and below the starting values for the types of subsurface material considered. The central area of layer two is characterized by very low hydraulic conductivity cells because it is mainly characterized by clay. Larger hydraulic conductivity values occur where faults correspond to spring flow. Hydraulic conductivity values of the initial and the calibrated models are listed in Fig. 4, and the parameter description and names are listed in Table 1.

Hydraulic conductivity zones, values and parameters of the initial and calibrated Tivoli-Guidonia model. Colors of lithologies correspond with Fig. 3 and are also used in Figs. 10 and 11. Hydraulic conductivity parameter names are, for example, CAR-KX, TRH-KY, and so on. TRL-KY and TRL-KZ are combined because estimation ended up with the same values

A constant head of 80 masl was applied all around the active domain in layer three, which consists of the carbonate bedrock. This head is consistent with the level of EL and MG survey points and with the “Pozzo del Merro” karst lake level, considered as the head of the saturated carbonate system, which is consistent with the widely held view that head loss through the regional carbonate aquifer is small (Capelli et al. 2013; Worthington 2009). Heads EL and MG are used as observations to make it easy to check simulated values in layer 3. Flows through the constant heads in the lower model layer are evaluated to ensure they remain reasonable when pumping is applied. Layer two has no flow boundaries all around the low hydraulic conductivity Pliocene sediment domain.

In model layer one, the Aniene River is simulated using the River Package, and borders the southern limit of the area. The intense dewatering of quarrying activities was simulated using the Well Package. Less well-defined and smaller withdrawals from wells for agriculture, industry and domestic use were simulated with the Recharge Package, in which the applied recharge rate was set equal to the effective rainfall recharge minus the pumping rate (Fig. 5) according to the work of Capelli et al. (2005).

The springs in the plain were simulated using the Drain Package. The Drain Package is set up to enable water from model layer three to flow to the elevation of model layer one. The four spring observations are labeled ACQ, PIL, ALB, B1 and B2 in Fig. 3, and the surficial heads in the travertine are labeled P1–P7. Heads in the alluvial and recent sediments are labeled PA, PB, and PC, and bedrock heads labeled as EL and MG. Particle tracking using MODPATH (Pollock 1989) was performed to reveal how flow from the deep system moves into and through the shallow system under different pumping scenarios.

Parameterization, sensitivity analysis and calibration

The parameters defined for the final Tivoli-Guidonia basin model are listed in Table 1. Figures 1 and 3 show the location of the streams, Fig. 3 shows the distribution of the hydraulic conductivity parameters, while Fig. 4 lists the hydraulic conductivity parameters and Fig. 5 shows the imposed distribution of areal recharge and pumpage from minor wells; no parameters related to this distribution are defined. A flowchart of the calibration process is shown in Fig. 6. Parameterization began simply, and progressed to the definition of 31 parameters. Of the 31 parameters, 24 are used to calculate the hydraulic conductivity distribution, in part because the materials were found to be more anisotropic than originally hypothesized.

Three primary sensitivity analysis methods are used in this work. The importance of individual parameter values to the entire set of observations, which indicates the ability to estimate each parameter in the regression, is measured with composite-scaled sensitivities (css) calculated for each parameter. Parameter interdependence (one cause of parameter aliasing) is measured with parameter correlation coefficients (pcc) calculated for each parameter pair. The importance of individual observation importance to the set of parameters is measured with leverage statistics calculated for each observation. The equations for these measures are as follows:

where j and i identify a parameter and observation, respectively, b j is the value of the jth parameter, np is the number of parameters, n is the number of head and flow observations, X is a sensitivity or Jacobian matrix with n rows and np columns and components equal to ∂y i ′/∂b j ′ where y i ′ is the simulated equivalent of the ith observation and b j is the jth parameter. ω is the observation weight matrix, and in this work has nonzero terms on the diagonal equal to the reciprocal of the observation error variance, s i 2. Values of standard deviations (s i ) and coefficients of variation are defined in section “Hydrologic data, observations, error analysis, and weighting”. Variance is calculated as the square of a standard deviation or as the square of the product of a coefficient of variation and the observation value. In Eq. (2), the v ij are off-diagonal terms and v ii and v jj are diagonal terms of the posterior parameter variance-covariance matrix calculated here using linear theory as V(b) = s 2(X T ωX)−1. In Eq. (3), the x i is one row of the X matrix, so is a vector of the derivatives for one observation relative to each parameter. For convenience, the subscripts are omitted when referring to css, pcc and leverage in the text. These statistics are documented in Cook and Weisberg (1982), Helsel and Hirsch (2002), Hill and Tiedeman (2007), and elsewhere, but they are far from routinely used in the modeling of environmental systems.

The css are scaled by the parameter value b j because the results perform well in identifying which parameters can and cannot be estimated by regression, given the model types of parameters considered in this work. If css is thought of as a signal to noise ratio, the last expression in Eq. (1) suggests that the signal can be approximated as the sensitivity times 1 % of the parameter value, which has the same units as the observations. The noise can be approximated as 1 % of the observation standard deviation (when error based and with diagonal ω, the square-root of the weight equals the reciprocal of the observation standard deviation). This also has the units of the observation and the resulting signal to noise ratio is dimensionless. Parameters with css larger than 1.0 have, on average, sufficient signal to overcome the noise, based on this measure. In the absence of parameter interdependence, parameters with larger css tend to have smaller posterior coefficients of variation (posterior parameter standard deviation divided by the estimated value). Parameters with css values larger than 0.01 times the largest css can generally be estimated. Alternative parameter scalings by, for example, the parameter standard deviation are suggested by Hill and Tiedeman (2007), Finsterle (2015), and others, and are of considerable interest. Of concern when using either the parameter value or a standard deviation for scaling is that the values change during model development. The parameter value clearly changes, and the standard deviation changes unless only a priori standard deviations are used. In the latter case, determining the a priori value can be problematic. To some degree the biggest impact of the scaling is to make up for what may be very large differences in parameter magnitude, any scaling that accomplished this task is likely to provide useful results. When parameter values changes expressed as percent of the parameter value are of interest, as in this problem for which reasonable parameter value ranges can vary over an order of magnitude or more, scaling by the parameter value makes sense. For some types of parameters, scaling by the parameter value produces special problems, but such parameters are not considered in this work (for details see Hill and Tiedeman 2007 or Finsterle 2015).

This work presents a new css graph that shows the contributions from user-defined observation groups to the importance of each parameter. The method is described by Poeter et al. (2014). In this work the observation groups are defined as shown in Table 2. The graph is shown in Fig. 7a.

Evaluation of parameter sensitivity and interdependence (correlation) based on the 18 observations. a Composite-scaled sensitivities (css) for all 31 defined parameters and b parameter correlation coefficients greater than 0.85 for the 12 parameters with the largest css values (marked by an asterisk in part a). The regression estimated these parameters except for SED_KY which was set to SED_KX because of the high correlation (b). The sensitivity analysis results are calculated using the initial values as listed in Table 1 and required 63 model runs. KX, KY, KZ are hydraulic conductivity along model rows, columns, and vertically, respectively. FAH, TRL, TRH, CAR, SED, FAL, CLA, and CLH are defined in Table 1. The other parameters are defined in Table 2. Observations groups and weighting are defined in Table 2. Values are calculated for the starting parameter values

Global measures comparable to css are first-order eFAST or Sobol′ statistics, method of Morris, and elementary effects (Morris 1991; Saltelli et al. 1999; Saltelli et al. 2008). Given the consistent css obtained in this work, one would expect global results to produce results similar to those reported in this work.

The pcc values vary between absolute values of 0.00 and 1.00, where absolute values close to 1.00 indicate parameter interdependence and at least locally nonunique parameter estimates, given the constructed model and the observations and prior information provided. Mathematicians may note that, theoretically, values of 1.00 should not be attainable because the matrix would be singular; however, the round-off typically in practice often allows this calculation to occur. Absolute values smaller than 1.00 can mean less interdependence between parameters or numerical difficulties such as inaccurate sensitivities, so only values close to 1.00 conclusively indicate non-uniqueness (Hill and Østerby 2003). Absolute values close to 1.00 throughout parameter space suggest pervasive parameter interdependence. Interdependence between groups of parameters can be identified by pcc absolute values close to 1.00 between all parameter pairs in the group. In practice, absolute values larger than 0.95 are considered to suggest potential non-uniqueness, which can be tested by whether regression runs starting from different parameter values converge to similar parameter estimates, where similar is relative to the a posteriori parameter standard deviation. The pcc results are comparable to evaluations obtained by comparing first- and total-order effects in global methods such as eFAST and Sobol′ (Hill et al. 2015). The pcc graph is shown in Fig. 7b.

The css and pcc, along with system information, are used to decide which parameters are informed enough by available observations to be estimated by inversion. Alternatively, SVD and the ID statistic, which also are also functions of X and ω, can be used as described by Aster et al. (2013) and Anderson et al. (2015), and similar results would be expected (Poeter et al. 2014).

Leverage statistics vary between 0.0 and 1.0. A value of 1.0 indicates that the observation completely controls the value of one or more parameters, though it may also contribute information to other parameters. Progressively smaller values identify observations that are progressively either more redundant for all parameters or less important overall (Cook and Weisberg 1982). One consequence of redundancy is that observation errors are conveyed less directly into estimated parameters. In general, an observation is more likely to have high leverage if its location, time, circumstances or type provides unusual information on the parameters. Comparable statistics can be obtained using the more computationally demanding method of one-at-a-time cross-validation, in which observations are removed and the inversion repeated (e.g., Good 2001; Foglia et al. 2007).

Inversion consisted of finding parameter values that produce the smallest value of a weighted least-squares objective function—∑ω ii (y obs i − y ′ i )2—using Levenberg-Marquardt. This is a gradient-based method and regressions were repeated from different sets of starting parameter values to detect difficulties with relevant local minima. UCODE_2014 and its post processors (Poeter et al. 2014) were used to perform sensitivity analysis and calibration (La Vigna et al. 2009a, 2011).

Limitations and advantages

The Tivoli-Guidonia model has some limits. The large cell size simplifies the system, the nature and distribution of all sediments below the travertine aquifer are not well known. The hydrothermal heat transport and relative viscosity and buoyancy variations are not simulated, but the temperature difference between fresh and thermal water is small in this system (from 15–16 °C to a maximum of 23 °C). The neglected adjustments are small and inconsequential for this study. A main advantage of the Tivoli-Guidonia model is that predicted quantities and circumstances are similar to observations (Doherty and Christensen 2011). The three tests described in the following suggest that the model can be used for the intended purpose of system characterization and evaluation of pumpage distribution and rates used for dewatering.

Results and discussion

Three results are presented and discussed. First, the model runs were examined for any indication that the computationally frugal model analysis methods were inadequate. Second, selected model calibration and sensitivity analysis results and consequences for data collection and alternative model consideration are presented. Third, model accuracy is tested by considering alternative flow conditions and results are presented for a pumpage management scenario.

Examine model runs for evidence that the methods used might be inadequate

The computationally frugal methods used in this study have underlying assumptions that can produce misleading results in some circumstances (also noted, for example, by Saltelli et al. 2008; Kavetski and Clark 2010; Saltelli and Funtowicz 2014). For the results provided in this work, the concern for sensitivity analysis is mostly the assumption that the solution is smooth with respect to the parameters in the range of parameter values and model construction alternatives considered. The assumption of smoothness is required to calculate valid local sensitivities—the terms of the X matrix of Eqs. (1)–(3). The stricter assumption of linearity is required to calculate the same sensitivities throughout parameter space, but this rarely occurs in practice. Indeed, the variation of sensitivities within parameter space can reveal important system dynamics (Rakovec et al. 2014). For inversion, the effects of local minima can produce inaccurate results. To avoid reporting misleading or inaccurate results, efforts to calculate sensitivity analysis measures of parameter and observation importance and estimate parameter values were evaluated for evidence any difficulties. The parameter and observation importance measures provided results similar to those in Figs. 7 and 8 for the many sets of parameter values considered during model development. The greatest variation occurred in the pcc, which are discussed with the pcc results in the following section of this article. For css and leverage, the most and least important parameters and observations remained the same for the range of models and parameter values considered. A more thorough analysis could have been conducted with DELSA (distributed evaluation of local sensitivity analysis; Rakovec et al. (2014), but the results obtained indicate that the number of repetitions conducted during the model development process provided adequate testing. The optimal parameter values and model fit shown in Fig. 4 and Table 2 were similar obtained from different sets of starting parameter values; thus, there was no evidence of multiple minima, which is not surprising given the MODFLOW model is known to have few numerical daemons.

Evaluation of observation dominance as measured using leverage. When leverage equals 1.0, the observation completely controls the value of one or more estimated parameters. Zero indicates the observation does not contribute to estimation of parameter values. Evaluation of dimensionless scaled sensitivities (not shown) indicate that the flow observations ACQ, PIL, ALB, B1 and B2 control estimation of parameters TRL_KZ, FAH_KZ or FAL_KZ. The PC observation had smaller leverage values for other parameter sets, so the dominance indicated in this graph was not generally applicable and does not reflect a pervasive simulated dynamic. Here, the head observations related to the deep aquifer (EL and MG, see Fig. 3 for location) do not contribute to parameter estimation. Calculated at the starting parameter values

Model analysis and resulting added data collection

The calibration process flowchart of Fig. 6 indicates that an initial very simple model was clearly inadequate. Ten parameters were added that mostly were used to consider hypothesized variability and anisotropy of the hydraulic conductivity field and quantify the importance of pumpage. Graphs such as those in Figs. 7 and 8 were evaluated. Given the importance of the pumping rates and evaluation of model fit to observations, new pumping rate data were collected. It was found that previous values had generally been too low.

Subsequent calibration showed that even with the new pumping data, model fit and estimated parameter values were unsatisfactory. Reconsideration of the assumed homogeneity of the travertine plate permeability required re-evaluation of the widely accepted hydrogeologic framework of Capelli et al. (1987, 2005). A detailed field survey was conducted, focusing on upper travertine hydrodynamic features to detect more detail of the flow processes. The field survey involved collection of additional head data, better characterization of the outcropping lithology, and more detailed flow data everywhere, and especially in the quarry channels, representing the pumped water to evaluate the dewatering for every quarry company in detail. Within the travertine, two areas with diverse hydraulic conductivities were detected. The first corresponds to a restricted area showing a north–south open fracture system where large water flow occurs. This feature was simulated explicitly and hydraulic conductivities are labeled TRH in Figs. 3 and 4. The location shown in Fig. 3 was determined from a set of alternatives as the one that produced the best fit to data. The second area corresponds to the main travertine deposit where a general joint pattern enhances horizontal anisotropy. The new analysis suggested that TRL-KY (oriented in the north–south direction) was larger than TRL-KX (oriented in the east–west direction) and TRL-KZ, which were thought to be similar to one another. In addition, the new survey activity provided accurate site-specific flow values at PIL, B1 and B2 springs (Fig. 2), and for the first time provided a value for the ALB spring.

The resulting model had 31 defined parameters, which exceeded the 18 observations. Composite scaled sensitivities calculated using all 31 defined parameters are shown in Fig. 7a. The full height of each bar is the css value. These css values show that 15 parameters are unimportant (css less than 1 % of the maximum value of over 7 for any estimated parameter; or 0.05 was used as the cutoff) and of the remaining 16, 7 are important based on the signal to noise argument (css more than 1). The 15 unimportant plus four additional low sensitivity parameters were assigned to prior values or combined with other parameters, and were not considered individually in any other analysis presented in this work. During and at the end of model development, sensitivity analysis runs were routinely performed with all defined parameters activated and it was found that the same parameters were important and unimportant. The stacking of the css bars in Fig. 7a show that, in the absence of parameter interdependence, some parameters are dominated by the 13 head observations, and some, such as all of the DRK parameters, are dominated by the five flow observations.

The pcc graph (Fig. 7b) shows results for the final calibrated model. For all evaluations conducted during calibration, pcc indicated significant parameter interdependence for one parameter pair and within the a subset of four parameters, though the precise pcc values differed. For the final results in Fig. 7b, pcc was largest (pcc = −0.98) for the horizontal components of hydraulic conductivity for the alluvial and recent sediments (SED_KX and SED_KY), and these were lumped for parameter estimation. The resulting horizontal isotropy of 1.0 is consistent with the character of the sedimentary material, leaving 12 parameters to be estimated by inverse modeling. The largely interdependent set of four parameters includes FAL_KZ, ALB_DRK, B1_DRK and B2_DRK, where the latter two are conductances of two minor springs. Of the six pcc values that can occur given four parameters, four pcc values are greater than 0.85 for the final model run, and thus appear in Fig. 7b. The other two pcc values are just under 0.85. Interdependence between these parameters makes sense because the low permeability fault zones (with parameter FAL_KZ) are the “conduits” through which groundwater flows and is discharged to the aforementioned minor springs (with parameters ALB_DRK, B1_DRK and B2_DRK). The pcc values are commonly less than 0.95, so that, while a relation is evident, all four of these parameters were included in the inverse modeling. The inverse modeling was started from several alternative starting parameter sets. Similar parameter estimates and variances resulted, suggesting that the interdependence was not enough to cause uniqueness problems.

Leverage statistics (Fig. 8) equal 1.0 for the five spring flows and head PC. Inspection of dimensionless scaled sensitivities (the contribution from one observation to css, see Hill and Tiedeman (2007) for the equation; not shown here) were used to determine that each spring flow controls the parameter for the associated conductance, which is not a surprise and consistent with the stacked css results in Fig. 7a. The high leverage for PC was a surprise. The css results show that this is the only observation that provides information about two parameters: SED_KX and SED_KY. The negative pcc value for these parameters suggest that as long as the ratio or sum remain about the same, the same simulated equivalent to the PC head will be produced.

The lower values of leverage for most of the other head observations suggests that the information they provide is redundant or the observation provides little information. For the only two observations related to bedrock (EL and MG, see Fig. 3 for location), leverage is close to zero because the related dimensionless scaled sensitivities and css are close to zero (Fig. 7a). Thus, they do not contribute to the regression process of the final constructed model, which is not surprising because the main groundwater flow dynamics occur in the shallow travertine aquifer. The EL and MG observations occur in layer 2, but in the carbonate bedrock. Based on the css, leverage, and model fit results (Figs. 7 and 8 and Table 2), the values reflect only the nearby constant heads in layer 3. The SG head and P1–P7 mostly have fairly high leverage and the css graph shows that, with flows, they inform the travertine horizontal hydraulic conductivities (TRL_KX and TRL_KY), and vertical hydraulic conductivities of the continental deposits and the major fault zone (CLH_KZ and FAH_KZ).

The results of this work suggest that leverage, along with observation-stacked css graphs, are a good way to evaluate the importance of observations to inverse modeling. The alternative of using the observation contribution to the objective function to indicate observation importance and to determine how to weight the observations is suggested by Anderson et al. (2015, chapter 9). Here we simply note that this work provides an example of the utility of using css, pcc, leverage, and error-based weighting.

The final model fit for heads and flows (Table 2; Fig. 9) shows that there is less than one meter error in almost all heads (1 m is 2.5 % of the total 40 m of total head loss through this system) and less than 2 % of the observation in drains (only ALB is larger and about 14 %). The largest head residuals occur for alluvium and sediment observations PA and PC, which occur in model layer 1 along the southeast boundary of the model (Fig. 3).

Observed versus simulated heads for estimated parameters. A good model fit to observations is suggested when the values fall on the 1:1 line. The colors are as in the explanation of Fig. 7a (Dark blue head group “as”, Sky blue head group “sg”, Emerald green – head group “tr”, Black overlappingsymbols). Simulated and observed flows fall nearly on the 1:1 line and are omitted because they are large negative values and made the fit to heads indecipherable

Tests using three alternative scenarios and simulation of managed pumpage

The calibrated Tivoli-Guidonia Plain model produced the fit to observations shown in Fig. 9 and the simulated head distribution shown in Fig. 10. The model was tested using three other recharge and pumpage conditions described by La Vigna et al. (2012a). Briefly, they are: (1) simulation of pre-development state, prior to dewatering; (2) simulation of a very dry season related to year 2000; (3) simulation related to the August holiday season dewatering scenario. The model was then used in a resource management exercise to identify a dewatering scenario that required less pumpage. Basic features of and selected results from these simulations are provided in Table 3 and Fig. 11, and are briefly described here.

Head contours (dark blue) and simulated particle paths (light blue and yellow) for four simulations of the Tivoli-Guidonia model. Simulation details and results are listed in Table 3. Green cells indicate the river cells

Pre-development. The boundary conditions are the same as for model calibration, while the dewatering and the adjustments to recharge that represent the other withdrawals were removed. The resulting simulated water table is shown in Fig. 10a and is much smoother than the calibrated water table shown in Fig. 10. Groundwater is simulated to flow into the Aniene River, as is consistent with past anecdotal evidence. Similarly, the currently dry Acque Albule Springs is simulated to flow at a rate of 800 L/s, which again is consistent with anecdotal reports from the past.

Very dry season. During the driest season monitored in this area (year 2000), the rainfall recharge decreased by about 60–70 %. As a result, the Pozzo del Merro Lake level was measured to be 3–4 m lower, and the Acque Albule Springs Lakes decreased of about 2 m. To simulate these conditions, the constant head of the carbonate system was lowered from 80 to 76 masl, and the rainfall recharge was reduced by 60 %. The human perturbations (dewatering and withdrawals) were maintained. The output shows a general decline of heads and flow to surface-water bodies. On the west side of the system, water is simulated as flowing from Aniene River into the groundwater system, indicating a reversal of flow direction from the calibrated model; there are no observations or anecdotes to compare to this simulated result. The water level of Acque Albule Springs is simulated to be about 2 m lower, which is consistent with observed values (Capelli et al. 2005).

August holiday season dewatering. During August vacation, the dewatering wells operate at a much lower level. The distribution of the pumpage reduction varies between wells and year to year. Here, it is approximated by reducing the simulated pumping for dewatering by 30 %. Simulated results show a general rise of the water table in the dewatering area (Fig. 11) that closely matches measured values of La Vigna et al. (2012a). The level around the Acque Albule Springs rises about 0.8 m; there are no observations or anecdotes to compare to this simulated result.

Management. The model was used to determine if the required dewatering could be attained with a lower pumping rate. Presently, the dewatering is not organized; every quarry pumps to attain its local goal. In this scenario, the current position and dewatering amount of the 17 pumps was reorganized to 9 alternative pumping locations that were identified using the natural ground water drainage. The pumping rate of each of the nine pumps was optimized by trial and error. Simulated heads are shown in Fig. 11d. The output shows that with the new locations and pumping rate, the dewatering effect is very similar to the effects of current dewatering, while the water pumped is about 20 % less. Greater efficiencies might be found using formal methods such as those described by Ahlfeld and Mulligan (2000).

To show the impacts of the dewatering on flow directions, particle-tracking was simulated for the final calibrated model (Fig. 10), the three validation test scenarios (Fig. 11a–c), and the management scenario (Fig. 11d). Particles were started at the middle of the lower layer and tracked as they traveled vertically up through the confining unit to the surficial aquifer. The predevelopment simulation (Fig. 11a) shows flow paths without the dewatering. In all figures with dewatering (Fig. 10 and 11b–d), the pathlines are similar. The cross-sections in Fig. 10 show how water travels upward along permeable faults. Also shown are head contours in the lower layer caused by dewatering and thus lower heads in the surficial material.

Conclusions

This modeling project demonstrates starting with a simple model and adding complexity after new data collection which was motivated in part by early modeling results. The method required continual evaluation of what parameters were important and interdependent, and what observations dominated estimated parameters. Computationally frugal local sensitivity analysis provided the needed results.

The goal of model development is to provide important insights and accurate models. As designed, the model development described in this work yielded the following important insights about the system being studied.

-

1.

A long-held belief about the homogeneity of an extensive travertine deposit was questioned and large-scale heterogeneities were identified that better explain hydraulic conditions.

-

2.

Original estimates of dewatering withdrawals were found to be important using model sensitivity analysis and subsequently found to be inaccurate through close inspection.

-

3.

In all, 12 of 31 defined parameters of the final model were found to be most important using model sensitivity analysis, and reasonable values of the 12 were estimated by optimizing fit to observations.

-

4.

Parameter correlation coefficients revealed some parameter interdependence, but unique estimates were produced by imposing hydrogeologically supported isotropy criteria on some units.

-

5.

Of the 18 observations, model sensitivity analysis identified 6 that dominate the value of at least one of the 12 estimated parameters, which means that any observation error (measurement or epistemic error) will directly impact the value of at least one estimated parameter.

-

6.

Tests conducted for three alternative hydraulic conditions suggest good model performance.

-

7.

Simulated particle tracking and the importance of the parameters representing the vertical hydraulic conductivity of fault zones (FAH_KZ and FAL_KZ) determined by sensitivity analysis showed that the fractured superficial aquifer is strongly influenced by flow from the deeper system. This was hypothesized by La Vigna et al. (2012a, b), La Vigna (2009, 2011) and Capelli et al. (1987) and supported by the existence of hydrothermal groundwater upwelling to major and minor hydrothermal springs (La Vigna and Gnoni 2014).

These are the kind of insights for which any model development methodology strives. The developed model was used to analyze pumping for dewatering. The predicted quantities are similar to the observations used for model development, so the parameters informed and uninformed by the calibration are likely to be important and unimportant to the predictions, which bodes well for prediction accuracy. It was found that similar dewatering results might be achieved using 20 % less pumped water, but would require a substantial investment for the reorganization of the dewatering system to apply the model solutions (La Vigna et al. 2013b).

References

Ahlfeld DP, Mulligan AE (2000) Optimal management of flow in groundwater systems. Academic Press, London

Anderman ER, Hill MC, Poeter EP (1996) Two-dimensional advective transport in ground-water flow parameter estimation. Ground Water 34(6):1001–1009

Anderson MP, Woessner WW, Hunt RJ (2015) Applied groundwater modeling: simulation of flow and advective transport, 2nd edn. Academic, San Diego

Aster RC, Borchers B, Thurber CH (2013) Parameter estimation and inverse problems. Academic, Amsterdam

Baiocchi V, Cazzella R, Giannone F, Liso L, Vecchio M (2010) Metodologie topografiche integrate per il rilievo di dettaglio del pozzo del Merro (Sant’Angelo Romano) [Topographical integrated methodologies for the detailed survey of the Pozzo del Merro -Sant’Angelo Romano]. Atti della XIV Conferenza Nazionale ASITA, Brescia, Italy, November 2010, pp 107–112

Barlebo HC, Hill MC, Rosbjerg D (2004) Identification of groundwater parameters at Columbus, Mississippi, using three-dimensional inverse flow and transport model. Water Resour Res 40(4):W04211. doi:10.1029/2002WR001935

Barth GR, Hill MC, Illangasekare TH, Rajaram H (2001) Predictive modeling of flow and transport in a two-dimensional intermediate-scale, heterogeneous porous media. Water Resour Res 37(10):2503–2512

Best MJ, Abramowitz G, Johnson HR, Pitman AJ, Balsamo G, Boone A, Cuntz M, Decharme B, Dirmeyer PA, Dong J, Guo M, Ek Z, Haverd V, van den Hurk BJJ, Nearing GS, Pak B, Peters-Lidard C, Santanello JA Jr, Stevens L, Vuichard N (2015) The plumbing of land surface models: benchmarking model performance. J Hydrometeor 16:1425–1442. doi:10.1175/JHM-D-14-0158.1

Beven K (2009) Environmental modeling: an uncertain future? Rutledge, Abingdon, UK

Borgonovo E (2006) Measuring uncertainty importance: investigation and comparison of alternative approaches. Risk Anal 26(5):1349–1361. doi:10.1111/j.1539-6924.2006.00806.x

Borgonovo E (2007) A new uncertainty importance measure. Reliab Eng Syst Safe 92(6):771–784. doi:10.1016/j.ress.2006.04.015

Capelli G, Cosentino D, Messina P, Raffi R, Ventura G (1987) Modalità di ricarica e assetto strutturale dell’acquifero delle sorgenti Capore – S.Angelo (Monti Lucretili – Sabina Meridionale) [Recharge and structural setting of the Capore aquifer sources: S. Angelo (Lucretili Mounts – Southern Sabina)]. Geol Romana 26:419–447

Capelli G, Mazza R, Gazzetti C (2005) Strumenti e strategie per la tutela e l’uso compatibile della risorsa idrica nel Lazio: gli acquiferi vulcanici—quaderni di tecniche di protezione ambientale—protezione delle acque sotterranee [Tools and strategies for the protection and sustainable use of water resources in Lazio Region: volcanic aquifers—notes on environmental protection techniques—groundwater protection]. Pitagora, Bologna, Italy

Capelli G, Mastrorillo L, Mazza R, Petitta M, Baldoni T, Banzato F, Cascone D, Di Salvo C, La Vigna F, Taviani S, Teoli P (2013) Carta idrogeologica del territorio della Regione Lazio scala 1:100.000 – 4 fogli [Hydrogeological Map of Latium Region 1:100.000 scale – 4 maps]. Regione Lazio, Rome

Carrera J, Alcolea A, Medina A, Hidalgo J, Slooten LJ (2005) Inverse problem in hydrogeology. Hydrogeol J 13:206–222

Carter RW, Anderson IE (1964) Accuracy of current meter measurements. Am Soc Civil Eng J 89(HV4):105–115

Carucci V, Petitta M, Aravena R (2011) Interaction between shallow and deep aquifers in the Tivoli Plain (central Italy) enhanced by groundwater extraction: a multi-isotope approach and geochemical modeling. Applied Geochem. doi:10.1016/j.apgeochem.2011.11.007

Clark MP, Slater AG, Rupp DE, Woods RA, Vrugt JA, Gupta HV, Wagener T, Hay LE (2008) Framework for understanding structural errors (FUSE): a modular framework to diagnose differences between hydrological models. Water Resour Res 44:W00B02. doi:10.1029/2007WR006735

Clement TP (2011) Complexities in hindcasting models: when should we say enough is enough? Ground Water 49(5):620–629. doi:10.1111/j.1745-6584.2010.00765.x

Cook RD, Weisberg S (1982) Residuals and influence in regression. Chapman and Hall, New York

Dausman AM, Doherty J, Langevin CD, Dixon J (2010) Hypothesis testing of buoyant plume migration using a highly parameterized variable density groundwater model at a site in Florida, USA. Hydrogeol J 18(1):147–160

de Marsily G, Delay F, Gonçalvés J, Renard P, Teles V, Violette S (2005) Dealing with spatial heterogeneity. Hydrogeol J 13:161–183

Di Baldassarre G, Montanari A (2009) Uncertainty in river discharge observations: a quantitative analysis. Hydrol Earth System Sci Discuss 6:39–61

Doherty J, Christensen S (2011) Use of paired simple and complex models to reduce predictive bias and quantify uncertainty. Water Resour Res 47(12):W12534. doi:10.1029/2011WR010763

Doherty J, Hunt RJ (2010) Response to Comment on: Two statistics for evaluating parameter identifiability and error reduction. Journal of Hydrology. doi:10.1016/j.jhydrol.2009.10.012

Doherty J, Welter D (2010) A short exploration of structural noise. Water Resour Res 46:W05525. doi:10.1029/2009WR008377

Faccenna C, Funiciello R, Montone P, Parotto M, Voltaggio M (1994) Late Pleistocene strike-slip tectonics in the Acque Albule basin (Tivoli - Latium). Memorie descrittive della Carta Geologica d’Italia, 49. Istituto Poligrafico e Zecca dello Stato, Rome

Faccenna C, Soligo M, Billi A, De Filippis L, Funiciello R, Rossetti C, Tuccimei P (2008) Late Pleistocene depositional cycles of the Lapis Tiburtinus travertine (Tivoli - central Italy). Glob Planet Change. doi:10.1016/j.gloplacha.2008.06.006

Fienen MR, Krabbenhoft HD, Clemo T (2009) Obtaining parsimonious hydraulic conductivity fields using head and transport observations: a Bayesian geostatistical parameter estimation approach. Water Resour Res 45:W08405. doi:10.1029/2008WR007431

Finsterle S (2015) Practical notes on local data worth analysis. Water Resour Res 51(12):9904–9924. doi:10.1002/2015WR017445

Finsterle S, Preuss P (1995) Solving the estimation-identification problem in two-phase flow modeling. Water Resour Res 31(4):913–924

Foglia L, Mehl SW, Hill MC, Perona P, Burlando P (2007) Testing alternative ground water models using cross validation and other methods. Ground Water 45(5):627–641. doi:10.1111/j.1745-6584.2007.00341.x

Foglia L, Hill MC, Mehl SW, Burlando P (2009) Sensitivity analysis, calibration, and testing of a distributed hydrological model using error-based weighting and one objective function. Water Resour Res 45:W06427. doi:10.1029/2008WR007255

Foglia L, Mehl SW, Hill MC, Burlando P (2013) Evaluating model structure adequacy: the case of the Maggia Valley groundwater system, southern Switzerland. Water Resour Res 49. doi:10.1029/2011WR011779

Funtowicz S, Ravetz J (1990) Post-normal science: a new science for new times. Scientific European 169:20–22

Gingerich SB, Voss CI (2005) Three-dimensional variable-density flow simulation of a coastal aquifer in southern Oahu, Hawaii, USA. Hydrogeol J 13:436–450

Giordano G, Mattei M, Funiciello R (2009) Geological map of the Colli Albani Volcano, scale 1:50.000, In: The Colli Colli Albani Volcano. Special Publication of IAVCEI, 3. Insert, Geological Society of London

Good PI (2001) Resampling methods: a practical guide to data analysis. Birkhauser, Boston

Gupta HV, Clark MP, Vrugt JA, Abramowitz G, Ye M (2012) Towards a comprehensive assessment of model structural adequacy. Water Resour Res 48. doi:10.1029/2011WR011044

Harbaugh AW (2005) MODFLOW-2005. The U.S. Geological Survey modular ground-water-model: the ground water flow process. US Geol Surv Techniques Methods 6 A16

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning, 2nd edn. Springer Series in Statistics, Springer, New York, 745 pp

Helsel DR, Hirsch RM (2002) Statistical methods in water resources, chap A3. In: US Geological Survey Techniques of Water-Resources Investigations, book 4. Hydrologic Analysis and Interpretation, USGS, Reson, VA, 510 pp

Hill MC (2006) The practical use of simplicity in developing ground water models. Ground Water 44(6):775–781

Hill MC (2010) Comment on “Two statistics for evaluating parameter identifiability and error reduction” by John Doherty and Randall J. Hunt. Journal of Hydrology 380(3–4):481–488

Hill MC (2008) Data error and highly parameterized groundwater models. In: Refsgaard JC et al. (eds) Calibration and reliability in groundwater modeling. Credibility of modeling, Proceedings of the ModelCARE2007 Conference, Copenhagen, September 2007, IAHS Pub. 320, IAHS, Wallingford, UK, pp 316–321

Hill MC, Nolan BT (2011) Sensitivity analysis for inverse problems solved by singular value decomposition (SVD). In: Poeter EP, Hill MC, Zheng C, Maxwell R (eds) Proceedings of the 2011 MODFLOW and More Conference, Golden, CO, June 2011

Hill MC, Østerby O (2003) Determining extreme parameter correlation in ground-water models. Ground Water 41(4):420–430

Hill MC, Tiedeman CR (2007) Effective groundwater model calibration. Wiley, Hoboken, NJ

Hill MC, Faunt CC, Belcher WR, Sweetkind DS, Tiedeman CR, Davetski D (2013) Knowledge, transparency, and refutability in groundwater models, an example from the Death Valley regional groundwater system. Phys Chem Earth 64:105–116

Hill MC, Kavetski D, Clark M, Ye M, Arabi M, Lu D, Foglia L, Mehl S (2015) Practical use of computationally frugal model analysis methods. Groundwater. doi:10.1111/gwat.12330

Hunt RJ, Doherty J, Tonkin MJ (2007) Are models too simple? Arguments for increased parameterization. Ground Water 45(3):254–262

International Standard (2007) ISO 748:2007, Hydrometry: measurement of liquid flow in open channels using current-meters or floats. International Organization for Standardization, Geneva

Kavetski D, Clark MP (2010) Ancient numerical daemons of conceptual hydrological modeling, part 2: impact of time stepping schemes on model analysis and prediction. Water Resour Res 46:W10511. doi:10.1029/2009WR008896

Keating EH, Doherty J, Vrugt JA, Kang Q (2010) Optimization and uncertainty assessment of strongly nonlinear groundwater models with high parameter dimensionality. Water Resour Res 46:W10517. doi:10.1029/2009WR008584

Kirchner JW (2006) Getting the right answers for the wrong reasons: linking measurements, analyses, and models to advance the science of hydrology. Water Resour Res 42:W03S04. doi:10.1029/2005WR004362

Kucherenko S, Rodriguez-Fernandez M, Pantelides C, Shah N (2009) Monte Carlo evaluation of derivative-based global sensitivity measures. Reliab Eng Syst Safe 94:1135–1148. doi:10.1016/j.ress.2008.05.006

Kucherenko S, Tarantola S, Annoni P (2012) Estimation of global sensitivity indices for models with dependent variables. Comput Phys Commun 183(4):937–946. doi:10.1016/j.cpc.2011.12.020

La Vigna F (2009) Modello numerico del flusso dell’unità idrogeologica termominerale delle Acque Albule (Roma) [Groundwater numerical flow model of the hydrothermal unit Acque Albule (Rome)]. PhD Thesis, Roma TRE University, Rome. http://dspace-roma3.caspur.it/handle/2307/434. Accessed January 2016

La Vigna F (2011) Modello numerico del sistema idrogeologico delle Acque Albule (Roma): test di simulazione in condizioni critiche di ricarica [Numerical model of the hydrogeological system of Acque Albule (Rome): simulation tests on critical recharge conditions]. In: Polemio M (ed) Le modificazioni climatiche e i rischi e risorse naturali: strategie e criteri d’intervento per l’adattamento e la mitigazione [The climatic changes, risks and natural resources: strategies and intervention criteria for adaptation and mitigation]. CNR-IRPI, Bari, Italy

La Vigna F, Gnoni A (2014) Groundwater–geothermal preliminary model of the Acque Albule Basin (Rome): future perspectives of geothermal resources exploitation. Acque Sotterranee. doi:10.7343/AS-000-13-0000

La Vigna F, Mazza R, Taviani S, Teoli P, Capelli G (2007) Development of a modern hydrogeological monitoring network in urban contest: the case of Acque Albule Plain, central Italy, Latium Region, Rome. Geophys Res Abstr 9:1124

La Vigna F, Rossetto R, Mazza R (2009a) Ground water model calibration using geology information along with sensitivity analysis and estimation methods (UCODE-2005), the Acque Albule model, Rome (Italy). In: Calibration and reliability in groundwater modeling: managing groundwater and the environment. China University of Geosciences Press, Wuhan, China, pp 79–82